2023-10-02 19:14 - How can I convert a text file to html as WYSIWYG?
Asked chatgpt, this was the output
    <!DOCTYPE html>
    <html lang="en">
    <head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <style>
    body { font-family: monospace; white-space: pre; }
    </style>
    </head>
    <body>
    <pre><xmp>
    TEXT
    </xmp></pre>
    </body>
    </html>
Works but it doesn't account for text that includes html data itself - says add "xmp" tags
Seems to work as expected - means anything in that block is not interpreted at all
Goal now is remove that space at the top and add word wrap
    <style>
    body { font-family: monospace; }
    xmp { white-space: pre-wrap; }
    </style>
Now make it so lines starting with spaces (code lines) are distinguished from regular lines
    echo "<!DOCTYPE html><html lang="en">
    <head>
    <meta charset="UTF-8"><meta name="viewport" content="width=device-width, initial-scale=1.0">
    <style> body { font-family: monospace; } xmp { white-space: pre-wrap; } </style>
    </head>
    <body><pre><xmp>
    `sed 's/^ \{1\}/\t/' "$1"`
    </xmp></pre></body></html>"
This seems good enough for purpose as a compiler - simple enough and can be run on all journals
But as "xmp" is technically a vulnerability how can I get around using that?
Actually just replacing each special character on compile seems to work fine - another sed command
    sed 's/^ \{1\}/\t/; s/</\&lt;/g; s/>/\&gt;/g' "$1"
Did a lot of testing with bold tags but it's all pointless.
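A minimal sketch of the "replace each special character on compile" idea, written as a standalone filter. The name escape_html is hypothetical (not from the compiler), and escaping "&" as well goes slightly beyond the command in this entry, which only handles "<" and ">".

```shell
#!/bin/sh
# Sketch: escape HTML-special characters so text shows up literally inside <pre>.
# escape_html is a hypothetical helper name, not part of the original compiler.
escape_html() {
    # "&" goes first so the entities added for "<" and ">" are not escaped again.
    # In a sed replacement "\&" is a literal ampersand (a bare "&" means "the match").
    sed 's/&/\&amp;/g; s/</\&lt;/g; s/>/\&gt;/g'
}

# Usage: feed any text through the filter
printf '%s\n' '<b>bold</b> & more' | escape_html
```

With this in place the "xmp" wrapper should not be needed, since nothing in the text can be read as a tag any more.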

2023-12-19 17:21 - Add it into a loop
Need to add this to a loop to actually work with multiple files - adding a separate script
    find -maxdepth 1 -name "20*" -type f -exec bash compiler.sh '{}' \;
Been experimenting with sed to close <pre> tags - all seems to give issues

2024-01-08 19:22 - Fixed the wrapping issue
So I've figured out how to wrap only specific parts of a line in tags by grouping components
Essentially you define groups of characters to look for (with brackets) and refer back to them
    s/(^\t|^\t.* )(# .*)/\1<i style="color: gray;">\2<\/i>/g
    # \1 = Capture lines either starting with "\t# " or "\t....... #" (up to but not inc. the #)
    # \2 = Any text after, and including, "#"
    # The effect is you can paste text exactly as before but wrap the "#" bit: "\1BEFORE\2AFTER"
Remember what you're doing is capturing relevant text and using brackets to split it up
And for simplicity there's no point using brackets unless you actually want to extract
    -e 's/&/\&amp;/g; s/</\&lt;/g; s/>/\&gt;/g'  # Remove special html characters from text
    -e 's/^---.*/<hr>/g'                          # Format lines starting with "---" (as a big line)
    -e 's/^# (.*)/<b>\1<\/b>/g'                   # Format lines starting with "#" (headers)
    -e 's/(^http.*)/<i>\1<\/i>/g'                 # Format lines starting with http (links)
    -e 's/^ (.*)/\t\1/g'                          # Format code lines (starting with a space)
    -e 's/(^\t|^\t.* )(# .*)/\1<i style="color: gray;">\2<\/i>/g' # Format code line comments
Also I wrote a nicer loop that only compiles files without a "!]" in the name (to filter out drafts)
    find -maxdepth 1 -name "20*" -not -name '*!]*' -type f
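A small sketch of the grouping trick on its own, so it can be tried on a single line. wrap_comment is a hypothetical name. A real tab character is passed in through a variable instead of "\t", on the assumption that not every sed understands "\t" in a pattern.

```shell
#!/bin/sh
# Sketch: wrap the trailing "# comment" part of a code line (tab-indented) in <i> tags.
# wrap_comment is a hypothetical helper name.
tab=$(printf '\t')

wrap_comment() {
    # Group 1: the tab alone, or the tab plus everything up to the space before "#".
    # Group 2: the "# ..." comment itself. Both are pasted back; only group 2 is wrapped.
    sed -E "s/(^${tab}|^${tab}.* )(# .*)/\\1<i style=\"color: gray;\">\\2<\\/i>/"
}

# Usage: a code line with a trailing comment
printf '\techo hi # say hi\n' | wrap_comment
```

Lines that do not start with a tab pass through unchanged, which is what keeps "#" headers in regular text from being picked up by this rule.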

2024-01-10 19:45 - Working on the scaling issues
Not quite resolved the scaling issues on mobile but compiling now sets date values on files
    # Once file is compiled, just touch it with a new date, based on the filename
    touch -d "$(echo $newfile | cut -d' ' -f1) 12:00" ./html/"$newfile"
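The same date stamping as a self-contained sketch. stamp_from_name is a hypothetical name, and it assumes GNU-style "touch -d" plus filenames that start with "YYYY-MM-DD " as in the journals here.

```shell
#!/bin/sh
# Sketch: set a file's modification time from the date at the start of its name.
# stamp_from_name is a hypothetical helper; assumes GNU-style "touch -d".
stamp_from_name() {
    # $1 = path to a file named like "2024-01-10 Some Title.html"
    name=$(basename "$1")
    filedate=${name%% *}                 # everything before the first space
    touch -d "${filedate} 12:00" "$1"    # midday, same as the journal entry
}

# Usage (commented out so the sketch has no side effects when sourced):
# stamp_from_name "./html/2024-01-10 Working on the scaling issues.html"
```

Using parameter expansion instead of "echo | cut" avoids the unquoted $newfile, which matters when filenames contain spaces.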

2024-01-30 18:40 - Rebuilt and extended
So I've rewritten the compiler to be cleaner, do sed filtering a bit better and have rss
Some interesting commands along the way:
    sed -n '/^# /p' "${1}"
    # Print out only lines in the file starting with "# "
    # Pipe this into another sed command or things will not work properly (due to -n)
    find -maxdepth 1 -name "20*.txt" -not -name '*!]*' -type f -printf '%P\n' | sort -r
    # Added this direct to the script - now just scans all files for me and prints them cleanly
    title=`echo ${rawfile%.*} | cut -d' ' -f2- | sed 's/ \[v.*\]//g; s/ !!//g'`
    # "2024-01-22 [active] RSS Repeaters [v002].txt" --> "[active] RSS Repeaters"
    # Essentially removes extension from filename, drops the date field and removes [v...] or !!
    # I may stop using this method of versioning anyway so likely not necessary
    sed -i '$ d' "${blogrss}" # Remove last line from a file
Also to write this I used a neat command to save the compiler file
    sed -e 's/^/ /' compiler.sh >> FILENAME
    # It's all being version tracked anyway so safe enough
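The title extraction wrapped in a function so it can be checked against the example filename. title_from_filename is a hypothetical name; the pipeline is the one from this entry, with quoting added.

```shell
#!/bin/sh
# Sketch: derive a page title from a journal filename.
# title_from_filename is a hypothetical helper name.
title_from_filename() {
    rawfile=$1
    # 1. ${rawfile%.*} strips the extension
    # 2. cut drops the first space-separated field (the date)
    # 3. sed removes a " [v...]" version tag and any " !!" marker
    printf '%s\n' "${rawfile%.*}" | cut -d' ' -f2- | sed 's/ \[v.*\]//g; s/ !!//g'
}

# Usage: the example from the journal
title_from_filename "2024-01-22 [active] RSS Repeaters [v002].txt"
```

One caveat with the pattern as written: ".*" is greedy, so anything between " [v" and the last "]" in the name is removed too.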

2024-02-01 13:50 - Trying to fix CSS of page
So currently it still doesn't scale how I want - uses text size scaling which is not ideal
    body { font-family: monospace; margin: 10px; padding: 0; }
    .container { width: 100%; margin: auto; }
    pre { white-space: nwrap; tab-size: 4; -moz-tab-size: 4; }
    i.codenote { color: gray; }
Looks nice so far but I want to have mobile zoom to text width by default
Okay I can accept mobile not working as I'd want - just going to settle for consistency
Before I used viewport based font scaling but it worked only on mobile and messed up desktop
Would do things like scale text to match zoom so you couldn't actually zoom
Just going to avoid this and keep things fixed
    <style>
    body { font-family: monospace; display: flex; font-size: 15px; margin: 10px; padding: 0; }
    .container { width: 100%; margin: auto; }
    pre { white-space: nwrap; tab-size: 4; -moz-tab-size: 4; }
    i.codenote { color: gray; }
    </style>

2024-03-02 21:15 - Complete rewrite
Completely rewrote this to work async and work using a reference file of hashes - 10x faster?
Some key lines of value:
    # Show only the files not already tracked in the hash file
    failedHashes=`md5sum -c "$FILES_ALL" 2>/dev/null | sed -n 's/\(.*\): FAILED$/\1/p'`
    # Read a list of untracked files and save their hashes (I like the piping in this)
    while IFS='' read -r fileName; do
        md5sum "$fileName" 2> /dev/null
    done <<< "$failedHashes" > "$FILES_CHANGED"
    # Save all metadata into a single |-separated file with bash subprocesses and paste
    paste -d'|' \
        <(cut -d' ' -f1 <<< "$dataFiles") \
        <(sed 's/ .*//' <<< "$dataTitles") \
        <(sed -E 's/.*\[(.*)\].*/\1/g' <<< "$dataTitles") \
        <(sed -E 's/.*\[.*\] (.*)\.txt/\1/g; s/ //g' <<< "$dataTitles") \
        > "$FILES_METADATA" # HASH|DATE|TYPE|TITLE
    # Read this |-separated file and save each value as its own variable
    IFS='|' read -r fileHash fileDate fileType fileTitle <<< "$fileLine"
    # Everything runs async so each process can run at the same time - wait till all is complete
    scanFiles
    makeIndex &
    makeRSS &
    makePages &
    wait
So instead of looping through each file it just makes a list of files/metadata to look at
Then all the "compiling" bit has to do is just sed the page - can all be done as async tasks
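A stripped-down sketch of the hash check, to show what "md5sum -c" piped into sed actually returns. changed_files is a hypothetical name, and the behaviour described in the comments is what GNU coreutils md5sum does; other implementations may word their output differently.

```shell
#!/bin/sh
# Sketch: list files whose content no longer matches a stored md5 checksum file.
# changed_files is a hypothetical helper; output format assumed to be GNU md5sum's.
changed_files() {
    # $1 = checksum file written earlier with: md5sum FILE... > hashes
    # md5sum -c prints "NAME: OK" or "NAME: FAILED" per line; keep only the failed names.
    # LC_ALL=C keeps the words OK/FAILED untranslated.
    # Missing files print "NAME: FAILED open or read", which this pattern does not match.
    LC_ALL=C md5sum -c "$1" 2>/dev/null | sed -n 's/\(.*\): FAILED$/\1/p'
}

# Usage (commented out so the sketch has no side effects when sourced):
# md5sum 20*.txt > .hashes      # record the current state
# changed_files .hashes         # later: print names of files edited since then
```

Note this only reports files already listed in the checksum file, so brand new files would need to be appended to the list by a separate step.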

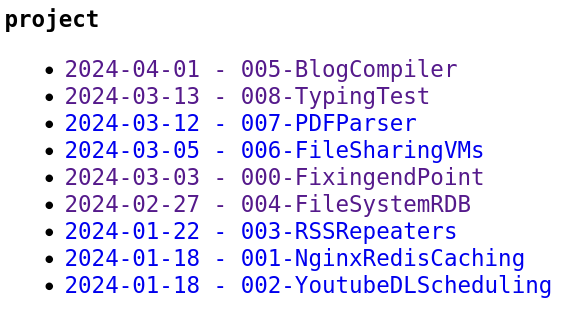

2024-04-01 18:06 - Sections
I've been thinking how I can separate different articles cleanly for viewing
No point moving to different folders - how about just indexing them differently
So how can I group items differently in the index page? Currently it just adds line by line
If I sort the original file by category then indexing automatically groups as I want
    sort -t'|' -k3 .metadata # Seems to do the trick
    while IFS=' ' read -r fileLine; do ... done <<< `sort -t'|' -k3 "$FILES_METADATA"`
Now just need to add a separator whenever there's a change in category
    [[ "$fileTypeOld" != "$fileType" ]] && indexfile="${indexfile}\n</ul><ul>"
    fileTypeOld="$fileType"
1: these lists are made next to each other not below. 2: these lists are not sorted by date
Solved 1st by removing "flex" from css and adding "div" wrappers
    catbreak="" # Instead of adding to indexfile variable directly
    if [[ "$fileTypeOld" != "$fileType" ]]; then
        catbreak="\n<b>${fileType}</b>\n<div><ul>"
        [[ "$fileTypeOld" != "" ]] && catbreak="\n</ul></div>${catbreak}"
    fi
Now how can I sort them such that they are grouped by category but also date sorted
Turns out the sort command works kind of weird - need multiple "-k" commands
    sort -t'|' -k3,3 -k2,2 .metadata  # Sort category then date
    sort -t'|' -k3,3 -k2r,2 .metadata # Sort category then reverse date (cleaner in this case)
So now this lets me group things arbitrarily and index them in the order I want
Okay how can I add in image support? I just need a reference symbol to point to a filename
Format for images should be "DATE_TITLE" with any symbols inside each, for convenience
It misses the point of my system if images can appear inline - at the bottom instead?
Or, easier, just put a link to them:

Now I reference it by first copying all referenced images at the build stage:
    grep -h --exclude-dir="*" "^~~~" * | sort -u | cut -d' ' -f2-
Actually now I think of it I should also hash this - and do it async to everything else
    # Nothing crazy - just document which files to copy and copy them if possible AND necessary
    function copyImages {
        mkdir -p "${MAINDIR}/${IMAGESDIR}"
        grep -h --exclude-dir="*" "^~~~" * | sort -u | cut -d' ' -f2- > "$FILES_IMAGES"
        while IFS='' read -r fileName; do
            [ -f "$fileName" ] || continue
            newFile="${MAINDIR}/${fileName}"
            [ -f "$newFile" ] || { cp "$fileName" "$newFile"; echo "Copied \"${fileName}\""; }
        done < "$FILES_IMAGES"
    }
    # Run this async by calling it with &
Now I'm getting failures in the build stage because I threw together a compiling bit
    's/^~ (.*)/<a href="\1">\1</a>/g' # What I tried - and is failing
    # Ah the issue is the /a of course - do \/a - also change it to actually work
    's/^~~~ (.*)/<a href="\1">\1<\/a>/g'
    # It also needs to point backwards so adjust for that
    's/^~~~ (.*)/<a href="..\\\1">\1<\/a>/g'
Build time is a bit longer now (due to that grep scan) - but can make it more efficient later
Actually let's try adding images inline instead
    's/^~~~ (.*)/<img src="..\\\1" style="border: 1px solid black;">/g'
    # Added the border too to make it stand out a bit more
    # If I adjust that .. system I could even add reference images - but let's ignore for now
    # I'll also worry about image dimensions later - when it becomes a more apparent issue
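Going back to the category-then-date sort from earlier in this entry, here is a sketch of the sort and the "break on category change" loop together. print_grouped is a hypothetical name and it prints plain text instead of the html list markup, just to make the ordering easy to see. The HASH|DATE|TYPE|TITLE layout is the one from the metadata file.

```shell
#!/bin/sh
# Sketch: group metadata lines by category, newest first within each category.
# print_grouped is a hypothetical helper; input lines are HASH|DATE|TYPE|TITLE.
print_grouped() {
    old_type=""
    # -k3,3 limits the first key to field 3 only; -k2r,2 is field 2, reversed.
    # Without the ",3" the first key would run from field 3 to the end of the line.
    LC_ALL=C sort -t'|' -k3,3 -k2r,2 "$1" |
    while IFS='|' read -r file_hash file_date file_type file_title; do
        if [ "$old_type" != "$file_type" ]; then
            printf '%s\n' "$file_type"       # category heading on every change
            old_type=$file_type
        fi
        printf '  %s %s\n' "$file_date" "$file_title"
    done
}

# Usage (commented out so the sketch has no side effects when sourced):
# print_grouped .metadata
```

This is probably why plain "-k3" looked like it worked at first: it does sort by category, but the rest of the line then acts as the tie-breaker instead of the date alone.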

2024-04-03 14:30 - Links and checks
Just sorted out the line checking system - so lines over a certain limit are announced
    # Use awk to check length of lines and output what lines those are - flatten to 1 line
    # Then use sed to print this out cleanly, with notice of what file it is
    awk -v clen="${CHARLEN}" 'length > clen { print NR }' "$fileName" | \
        tr '\n' ' ' | sed -E "s/^(.*)/$fileName:\n Lines too long: \1\n/"
Now I want to make lines that are links work properly
First of all - for that check script filtering out links is a good idea - pipe grep to awk
    grep -v -E -e "(# )*(http)(s)*(://)" "$fileName" | awk ... # As above
I think I'll just do all links on new lines: %%% LINK % TITLE - like how images are ~~~ FNAME
    -e 's/^~~~ (.*)/<img src="..\\\1" style="border: 1px solid black;">/g' # Image version
    -e 's/^%%% (.*) % (.*)/<a href="\1">\2<\/a>/g' # Can't see why that wouldn't work
This is the link to "endpoint"
Works. So how can I ignore those lines in the test? Just ignore starting with %%% or ~~~
    grep -v -E -e "^(# |)*(http)(s)*(://)"
Also want to convert old style lines that are ONLY links to just be links too
    -e 's/(^http.*)/<i>\1<\/i>/g' # Before
    -e 's/(^http.*)/<i><a href="\1">\1<\/a><\/i>/g'
So now if a line is JUST a URL it becomes a link - and if it's explicitly a URL it's a link
Actually now I think of it there's no need for the starting %%% is there? Remove that
    -e 's/(^http.*) % (.*)/<i><a href="\1">\2<\/a><\/i>/g'
    # This now catches lines starting with http - and allows for nicer link names
Links are now "http..." or "http... % TEXT" - much cleaner
Now I can go through my links and tidy them without much work - and checker can stay the same
    a { text-decoration: none; } # Add a bit of css because html links are ugly underlined
Now looking into how I can add gitlab pipelines to build this (just for fun)
    # .gitlab-ci.yml
    image: busybox:latest
    build:
      stage: build
      script:
        - curl -o compiler.sh https://gitlab.com/... # Link to "raw" code
        - chmod +x script.sh
        - ./compiler.sh
    # Is anything else really needed?
    # "curl: not found" of course - changing it to alpine might make more sense
    image: alpine:latest
    build:
      stage: build
      script:
        - apk add --no-cache curl
        - curl -o compiler.sh https://gitlab.com/...
        - chmod +x compiler.sh
        - ./compiler.sh
    # Again issues with packages missing - can't keep pushing changes - just going to run locally
    docker run -v ./blog:/text -it alpine:latest /bin/sh
    # Of course it just works in archlinux - grep and bash are different in alpine
So that works - how do I keep the files it generates?
    build:
      script: ...
      artifacts:
        paths: ['html']
        expire_in: 1 day
Wow that actually works well - and I can view a full plaintext version of the website too
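A sketch of the line-length check from the start of this entry, with the link and image filtering moved inside awk. long_lines is a hypothetical name. The reason for the change: piping "grep -v" into awk removes lines before awk counts them, so the NR values reported afterwards no longer match the line numbers in the file.

```shell
#!/bin/sh
# Sketch: print the numbers of lines longer than a limit, ignoring link and image lines.
# long_lines is a hypothetical helper name.
long_lines() {
    # $1 = file to check, $2 = maximum allowed line length
    awk -v clen="$2" '
        /^~~~ / || /^(# )?https?:\/\// { next }   # skip image lines and URL-only lines
        length($0) > clen { print NR }            # NR is still the real line number
    ' "$1"
}

# Usage (commented out so the sketch has no side effects when sourced):
# long_lines "2024-04-03 Links and checks.txt" 100 | tr '\n' ' '
```

The output can still be flattened with tr and labelled with sed exactly as in the journal version.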